Niall L. Williams

PhD Student

About me

I am a PhD candidate in computer science at the University of Maryland, College Park. I am a member of the GAMMA lab, where I work with Dr. Dinesh Manocha, Dr. Aniket Bera, and Dr. Ming C. Lin. My research interests include virtual/augmented reality, human perception, computer graphics, and robotics (in that order). For my dissertation, I am developing robust methods to enable exploration of large virtual environments in arbitrary physical environments using natural walking in VR. To this end, I use techniques from visual perception, robot motion planning, computational geometry, and statistical modeling to develop rigorous algorithms for steering users through unseen physical environments.

I graduated with a B.S. with High Honors in Computer Science from Davidson College. During my time at Davidson, I was a member of the DRIVE lab, where I was advised by Dr. Tabitha C. Peck. My undergraduate thesis studied redirected walking thresholds under different conditions and how we can efficiently estimate them.

My name is pronounced the same way as "Nile."

In my free time, I mostly enjoy drawing and competitive video games (DotA 2 and Tetris).

News

- February 2024: Visited the Graphics & Imaging Lab @ Uni. of Zaragoza to give a guest talk!

- January 2024: Started an internship on the Human Performance and Experience team at NVIDIA Research!

- December 2023: One TVCG paper accepted to IEEE VR 2024!

- August 2023: Joined the SIGGRAPH History Committee!

- January 2023: One paper accepted to IEEE ICRA 2023!

- All news...

Journal and Conference Publications

Perceptual Thresholds for Radial Optic Flow Distortion in Near-Eye Stereoscopic Displays
Mohammad R. Saeedpour-Parizi, Niall L. Williams, Tim Wong, Phillip Guan, Dinesh Manocha, Ian M. Erkelens
Transactions on Visualization and Computer Graphics, 2024 (Proc. IEEE VR 2024)
[Paper] [arXiv] [Project Page] [Video coming soon] [Bibtex coming soon]
We measured how sensitive observers are to image magnification artifacts in varifocal displays and to what extent we can leverage blinks to decrease their visual sensitivity, with applications to mitigating the vergence-accommodation conflict.

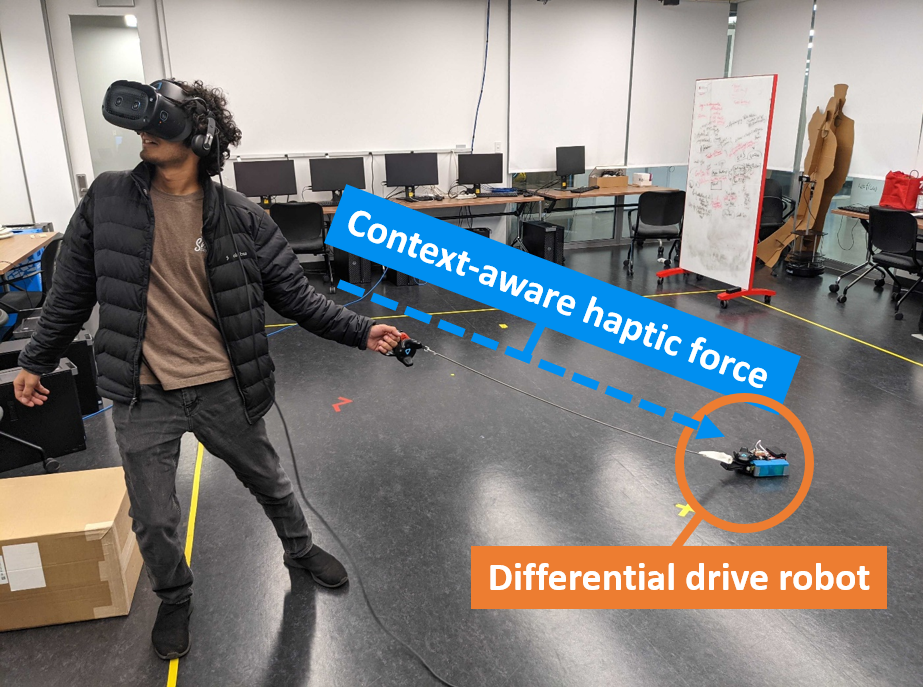

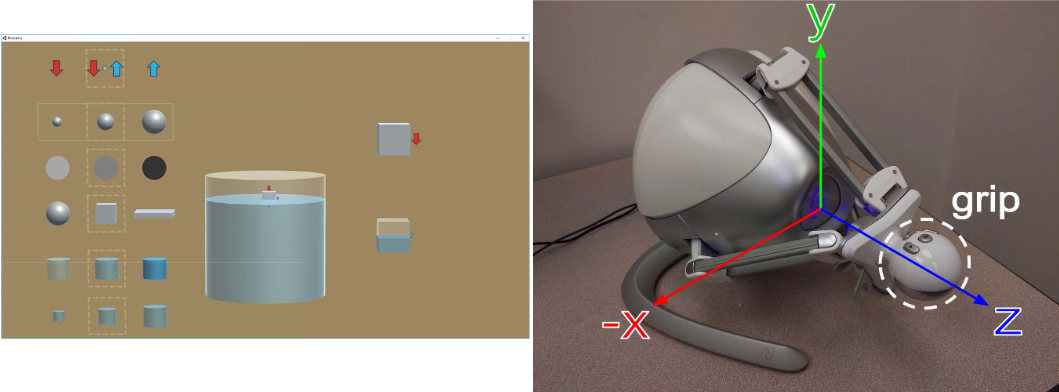

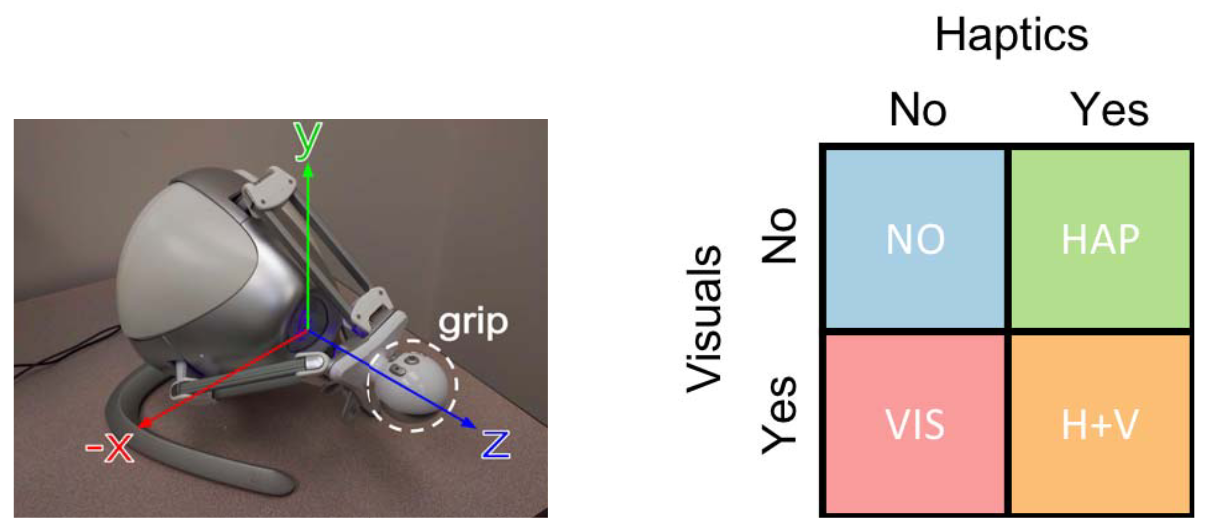

A Framework for Active Haptic Guidance Using Robotic Haptic Proxies
Niall L. Williams*, Nicholas Rewkowski*, Jiasheng Li, Ming C. Lin
IEEE International Conference on Robotics and Automation (ICRA), 2023
[Paper] [arXiv] [Project Page] [Video] [Bibtex] [DOI]
We used a robot to proactively generate context-aware haptic feedback that influences the user's behavior in mixed reality, to improve the immersion and safety of their virtual experience.

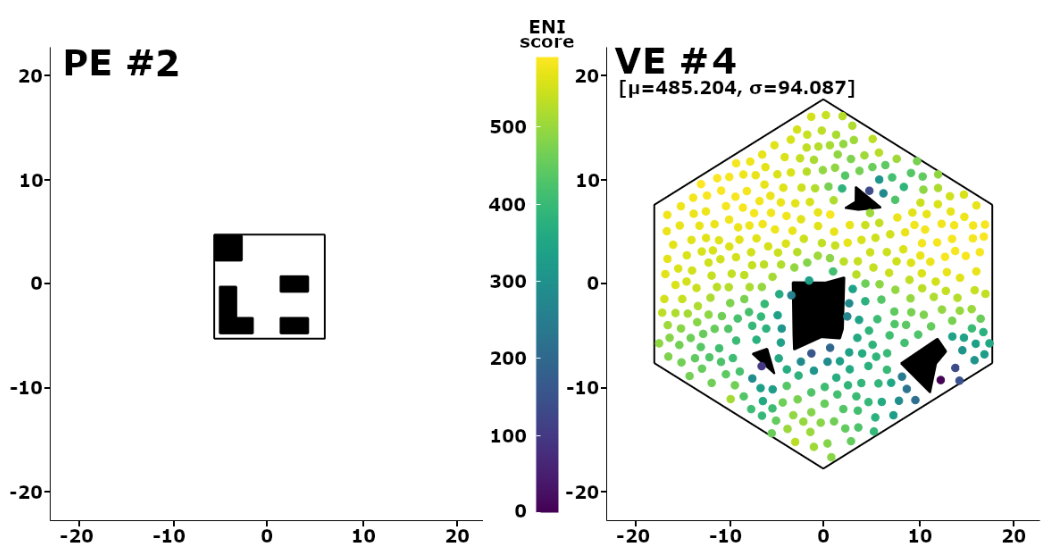

ENI: Quantifying Environment Compatibility for Natural Walking in Virtual Reality
Niall L. Williams, Aniket Bera, Dinesh Manocha
IEEE Conference on Virtual Reality and 3D User Interfaces (IEEE VR), 2022 [Best Paper Honorable Mention]
[Paper] [arXiv] [Project Page] [Video] [Code] [Bibtex] [DOI]
We provide a metric to quantify the ease of collision-free navigation in VR for any given pair of physical and virtual environments, using geometric features.

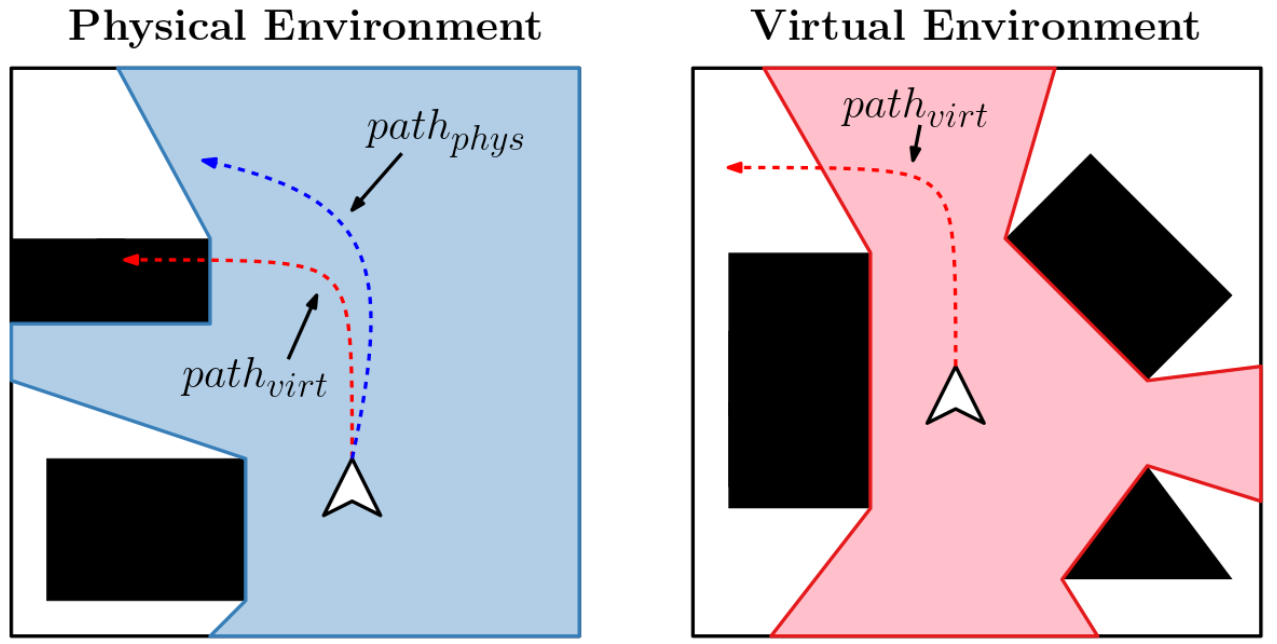

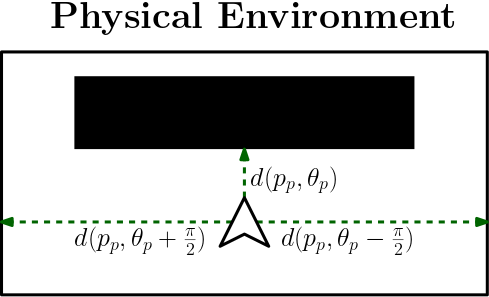

Redirected Walking in Static and Dynamic Scenes Using Visibility Polygons
Niall L. Williams, Aniket Bera, Dinesh Manocha
Transactions on Visualization and Computer Graphics, 2021 (Proc. IEEE ISMAR 2021) [Best Paper Honorable Mention]
[Paper] [arXiv] [Project Page] [Video] [Code] [Bibtex] [DOI]
We formalize the redirection problem using motion planning and use this formalization to develop an improved steering algorithm based on the similarity of physical and virtual free spaces.

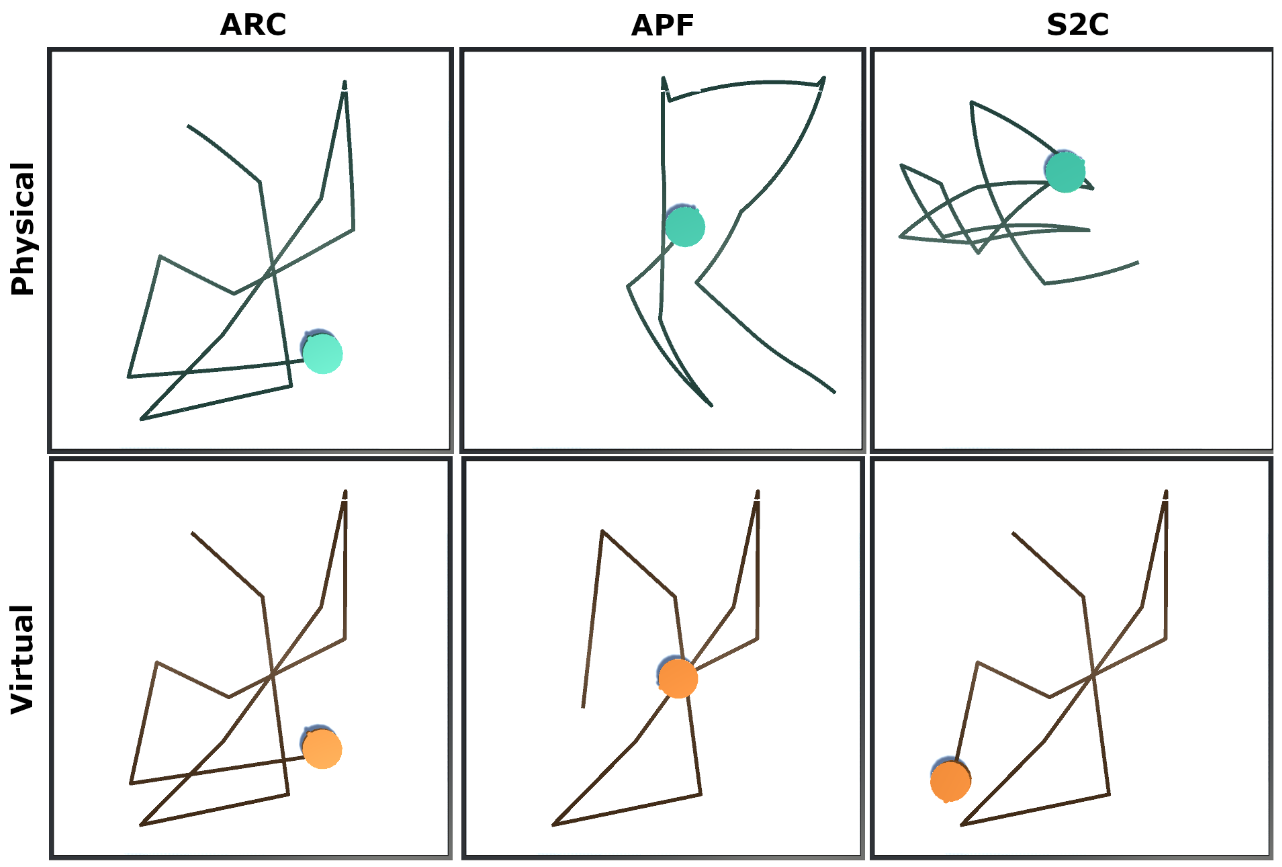

ARC: Alignment-based Redirection Controller for Redirected Walking in Complex Environments
Niall L. Williams, Aniket Bera, Dinesh Manocha
Transactions on Visualization and Computer Graphics, 2021 (Proc. IEEE VR 2021) [Best Paper Honorable Mention]
[Paper] [arXiv] [Project Page] [Video] [Code] [Bibtex] [DOI]
We achieve improved steering results with redirected walking by steering the user towards positions in the physical world that more closely match their position in the virtual world.

PettingZoo: Gym for Multi-Agent Reinforcement Learning
J. K. Terry, Benjamin Black, Mario Jayakumar, Ananth Hari, Ryan Sullivan, Luis Santos, Clemens Dieffendahl, Niall L. Williams, Yashas Lokesh, Caroline Horsch, Praveen Ravi
Neural Information Processing Systems (NeurIPS), 2021
[Paper] [arXiv] [Code] [Bibtex]
One of the most popular libraries for multi-agent reinforcement learning.

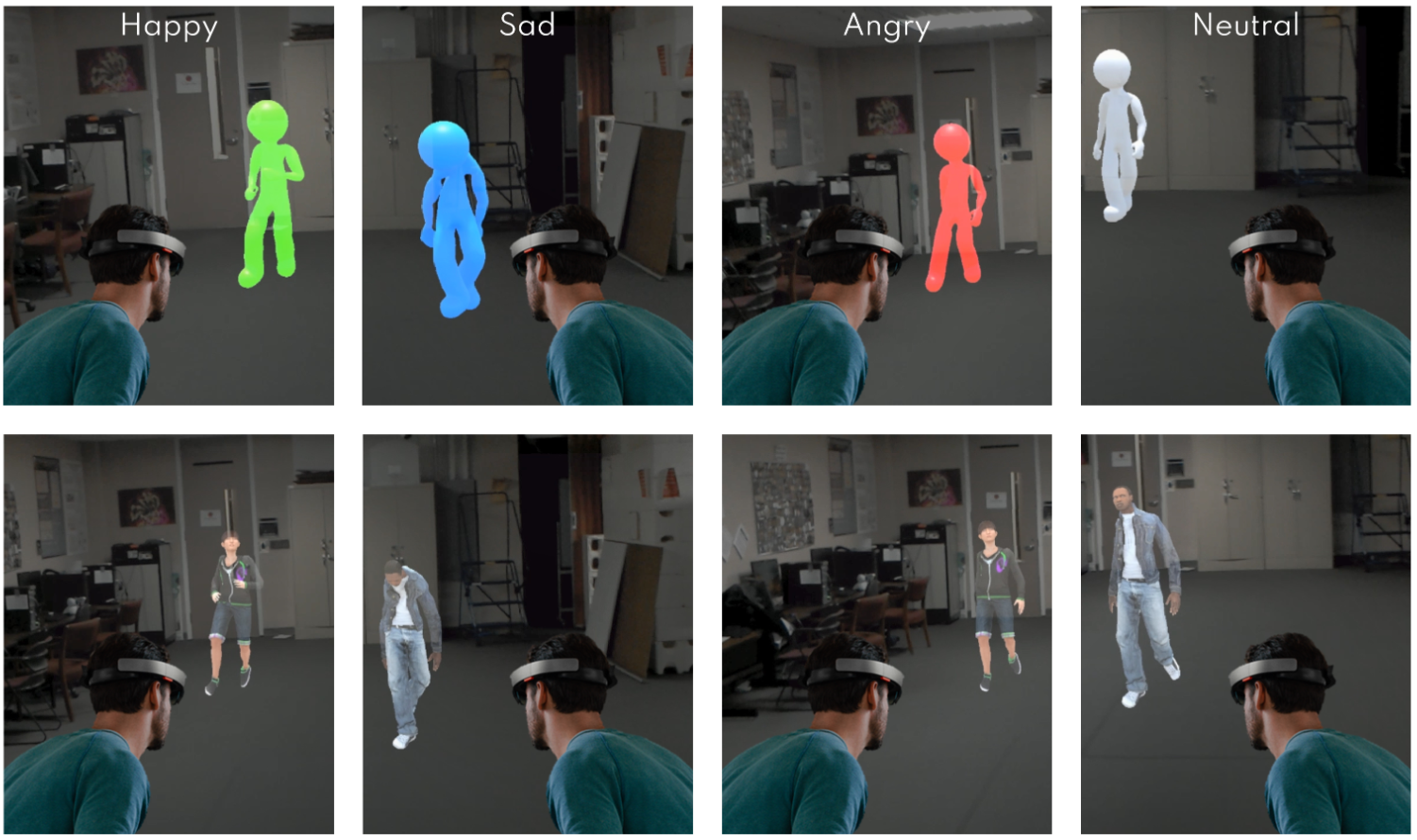

Generating Emotive Gaits for Virtual Agents Using Affect-Based Autoregression
Uttaran Bhattacharya, Nicholas Rewkowski, Pooja Guhan, Niall L. Williams, Trisha Mittal, Aniket Bera, Dinesh Manocha
IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2020
[Paper] [arXiv] [Project Page] [Video] [Code] [Bibtex] [DOI]
We automatically synthesize emotionally expressive gaits for virtual avatars using an autoregression network.

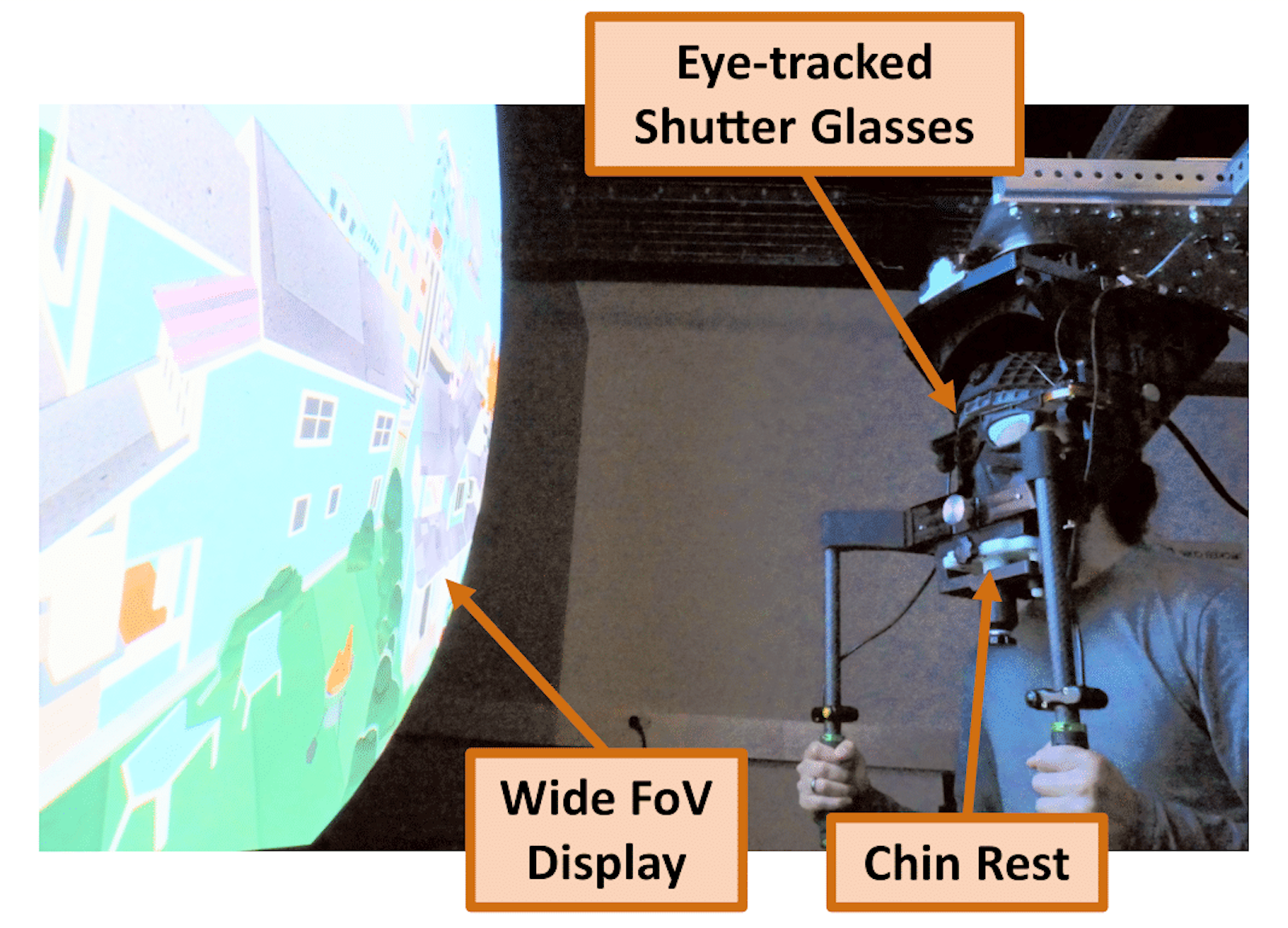

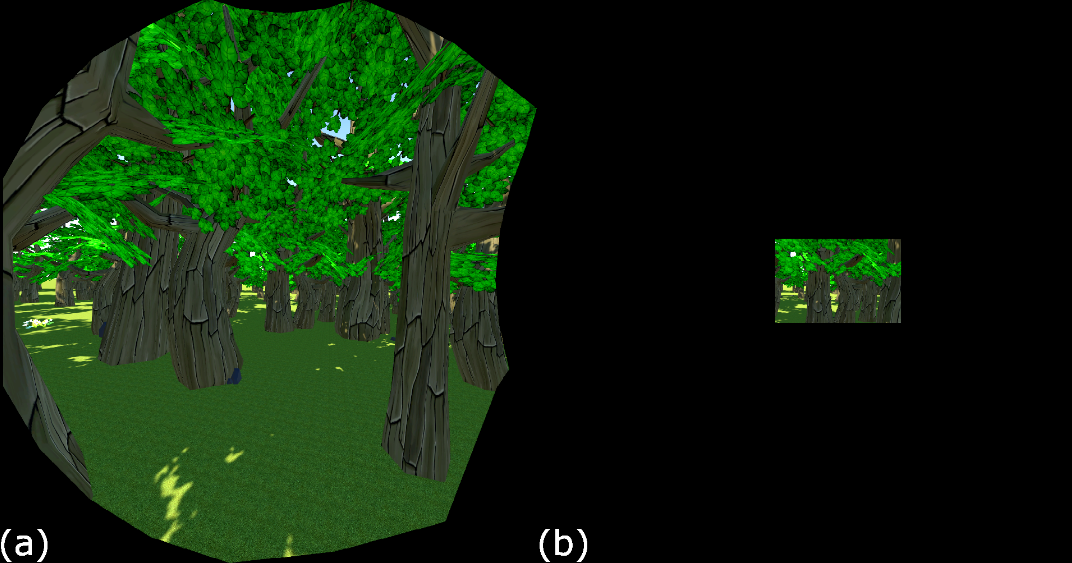

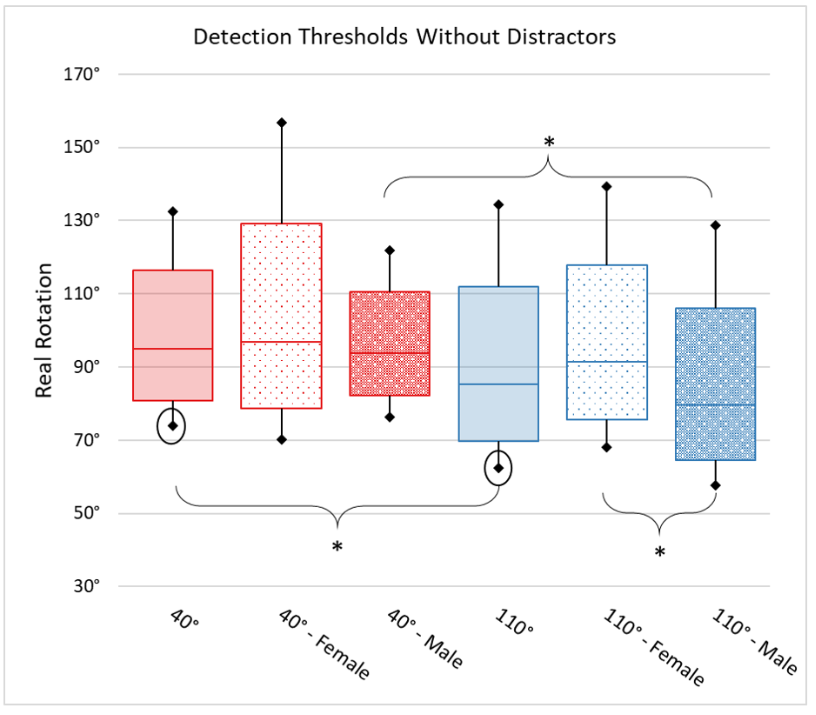

Estimation of Rotation Gain Thresholds Considering FOV, Gender, and Distractors
Niall L. Williams, Tabitha C. Peck
Transactions on Visualization and Computer Graphics, 2019 (Proc. IEEE ISMAR 2019)
We measured perceptual thresholds for redirected walking and found that the user's tolerance for redirection depends on the field of view, the presence of distractors, and their gender.

Workshop Papers and Posters

Redirection Using Alignment
Niall L. Williams, Aniket Bera, Dinesh Manocha
IEEE VR Locomotion Workshop, 2021
We provide a general framework for how alignment can be used in redirected walking to steer the user towards similar physical and virtual positions.

Augmenting Physics Education with Haptic and Visual Feedback
Kern Qi, David Borland, Emily Jackson, Niall L. Williams, James Minogue, and Tabitha C. Peck
IEEE VR 5th Workshop on K-12+ Embodied Learning through Virtual & Augmented Reality (KELVAR), 2020
Using haptic force feedback to help teachers better understand physics concepts.

The Impact of Haptic and Visual Feedback on Teaching
Kern Qi, David Borland, Emily Jackson, Niall L. Williams, James Minogue, and Tabitha C. Peck
IEEE Conference on Virtual Reality and 3D User Interfaces, 2020

Estimation of Rotation Gain Thresholds for Redirected Walking Considering FOV and Gender
Niall L. Williams, Tabitha C. Peck
IEEE Conference on Virtual Reality and 3D User Interfaces, 2019

Invited Talks

ARC: Alignment-based Redirection Controller for Redirected Walking in Complex Environments
Niall L. Williams
SIGGRAPH TVCG Session on VR, 2021
[Video]

Service

- Academic community

  - SIGGRAPH Research Career Development Committee, 2021 - Present

  - Peer reviewing: IEEE TVCG (2021 - present), IEEE VR (2020 - present), IEEE ISMAR (2021), IEEE Trans. on Games (2021), MobileHCI (2021), ACM CHI (2022)

  - Student volunteer: IEEE VR (2020, 2021), IEEE ISMAR (2019)

- University of Maryland, College Park

  - Graduate admissions application reviewer, 2019 - Present

  - Girls Talk Math summer camp problem set reviewer, 2021

  - Graduate school application mentor, 2020

- Davidson College

  - Math & CS department student representative, 2018 - 2019

  - Davidson College ACM chapter co-founder, 2018 - 2019

Teaching

I greatly enjoy teaching because it combines some of my favorite things: talking about computer science, introducing people to computer science, and learning. I would like to see more diverse groups of people become active in the computer science community, and I think teaching is an important step towards that. It's important to me that everyone has an equal opportunity to learn, so I try my best to be welcoming and to avoid making assumptions about people's prior knowledge. I learned a lot about teaching from my time as an undergrad at Davidson, and I deeply agree with their approach to teaching.

- University of Maryland, College Park

  - CMSC838C TA (Advances in XR), Spring 2023

  - CMSC838C TA (Advances in XR), Spring 2022

  - CMSC420 TA (Advanced Data Structures), Fall 2021

  - CMSC423 TA (Bioinformatic Algorithms, Databases, and Tools), Spring 2021

  - CMSC420 TA (Advanced Data Structures), Fall 2020

  - CMSC425 TA (Game Programming), Spring 2020

  - CMSC420 TA (Advanced Data Structures), Fall 2019

- Davidson College

  - Computer Science Tutor, 2018 - 2019

  - Computer Science Head TA, Spring 2019

  - Computer Science Grader, 2017 - 2018

Fun Stuff

A collection of random bits of info about me or things I find interesting.

- While it's true that I'm a published author, I do not write novels.

- My typing speed is about 92 WPM.

- I walked my dog during a hurricane once (nature calls!).

- I have gone scuba diving with sharks in open water (no cage!).

- I had a cast on my left arm and right leg at the same time for 6 weeks.

- I won the only poker game I ever played. I no longer remember how to play poker.

- Some of my favorite pictures of my cat: Img 1, Img 2, Img 3, Img 4, Img 5, Img 6, Img 7, Img 8.

- My undergrad thesis advisor and second reader made watching all episodes of Seinfeld a requirement to qualify for honors.

- I once spent a summer helping newly-hatched sea turtles safely reach the ocean.

- My Erdős Number is 4 (Me → Dinesh Manocha → Pankaj K. Agarwal → János Pach → Paul Erdős)

Useful resources:

- LaTeX abstract extractor

- Time conversion for anywhere-on-earth.

- Poster templates

- SIGACCESS guide to making your PDFs accessible to people with disabilities.

- SIGACCESS guide to making your presentations accessible to people with disabilities.

- Article about how to get involved in research as an undergraduate student.

- Books for graduate students.

- Math for computer graphics.

- Tour of computer graphics.

- Statistical test cheat sheet (UCLA).

- Statistical test cheat sheet (Andy Field book).

- Color scheme resource.

- Advice for CS PhD students.

- 10 tips for academic talks.

- How to speak.

- How to title a paper, by Jitendra Malik

- How to organize a presentation by Theodore Kim.

- Accepted and rejected grant application examples.

- Secrets to writing a winning grant

- What to know before going into an interview

- How to organize your calendar

- Latin square calculator

- USA CS PHD FAQ

- Information on asking about name/gender on data-collection forms.

- Guide to postdoc

- Bibtex cleaning tool

- Applying to Ph.D. Programs in Computer Science

- Demystifying the American Graduate Admissions Process

- Nifty assignment ideas

- Online "course" for learning graphics programming.

- Automatically generate and add subtitles to a video.

- Visual Angle (Field of View) Calculator

- Sabine Hossenfelder: I'm now older than my father has ever been

- Paul Graham: How to do great work

- Crystal Lee: Salary + negotiation notes for academia

- Math for 3D graphics.

- Unit Circle angle visualizer

- 10-minute Physics for Computer Graphics

Fun reads/cool things:
